|

I am a Computer Science Ph.D. student at Boston University. I am fortunate to be advised by Prof. Dokyun "DK" Lee and Prof. Kate Saenko. I received my Master's and Bachelor's degrees in Computer Science from the Georgia Institute of Technology, where I worked on data-efficient learning and methodologies for human-robot interaction. Email / CV / Google Scholar / GitHub |

|

|

My research focuses mainly on multi-agentic systems. In particular, I am interested in identifying and mitigating bias and misinformation in foundation models. |

|

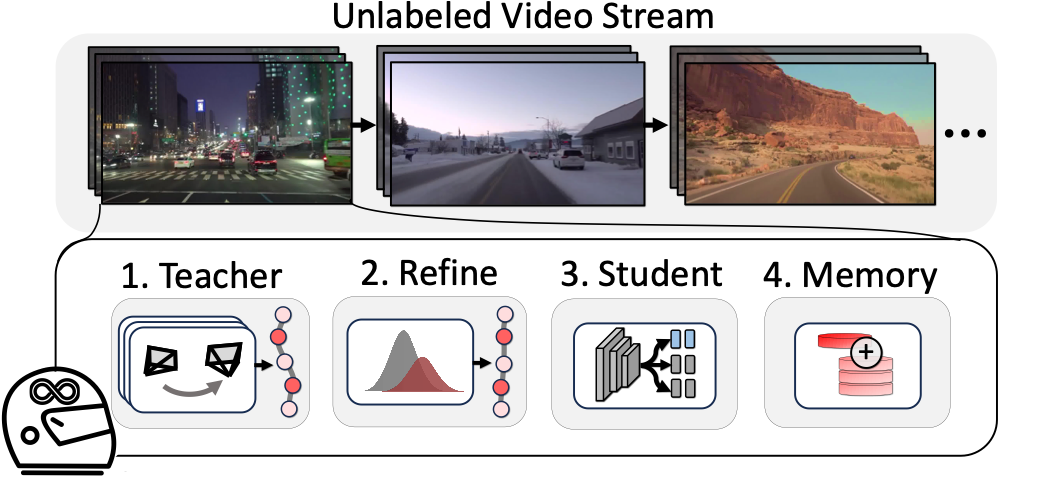

Lei Lai, Eshed Ohn-Bar, Sanjay Arora, John Seon Keun Yi CVPR, 2024 project page / code / paper Continuously learning to drive from an infinite flow of YouTube driving videos. |

|

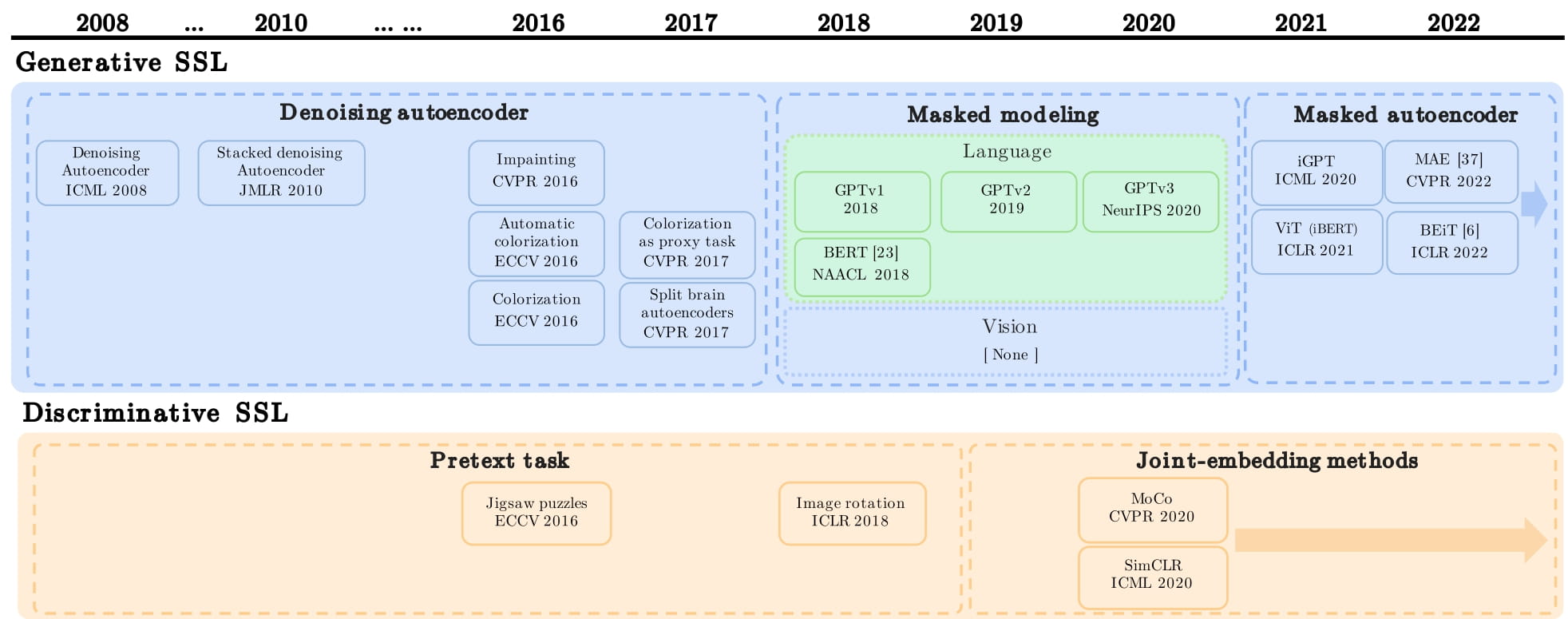

Chaoning Zhang, Chenshuang Zhang, Junha Song, John Seon Keun Yi, In So Kweon IJCAI, 2023 arXiv A comprehensive survey on Masked Autoencoders (MAE) in vision and other fields. |

|

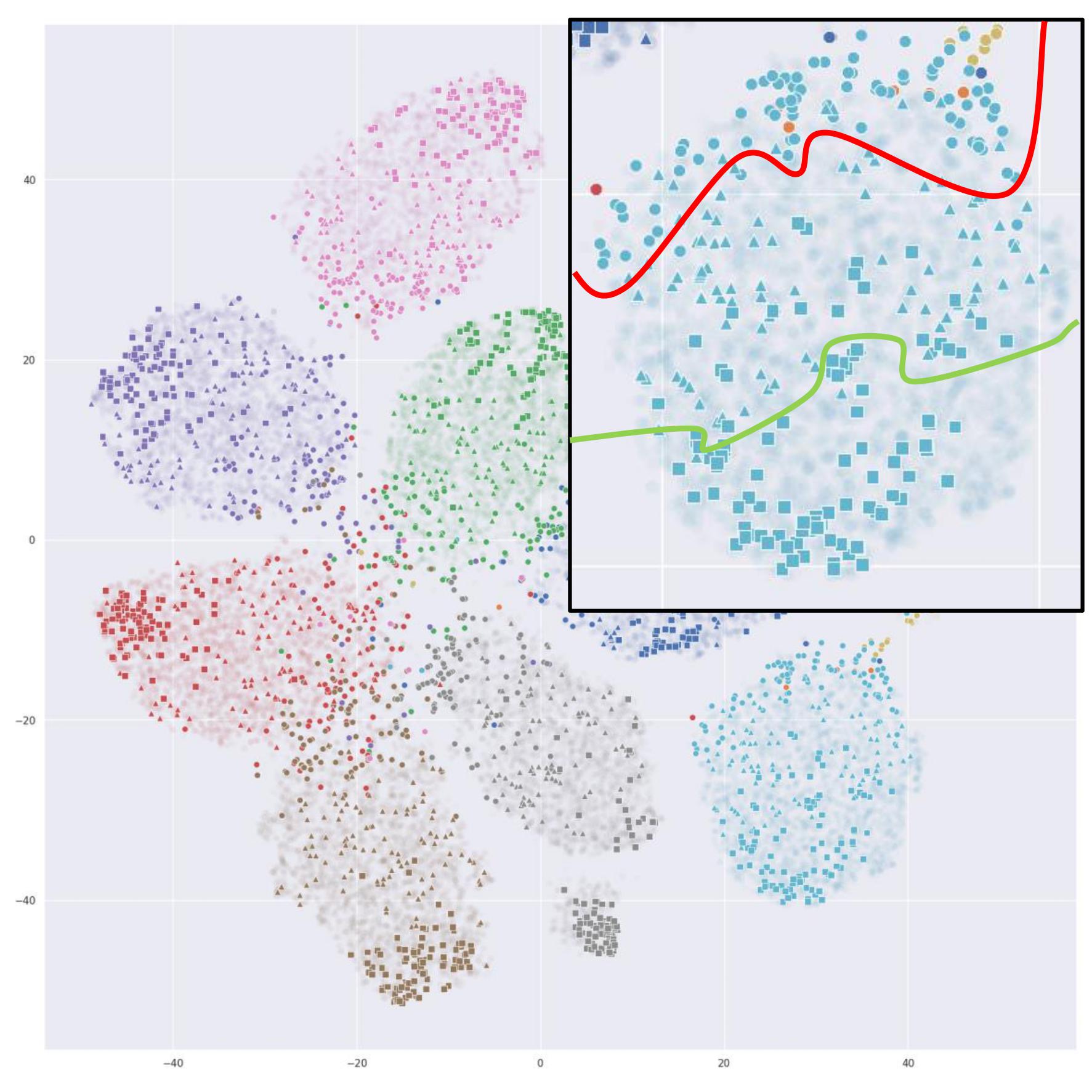

John Seon Keun Yi*, Minseok Seo*, Jongchan Park, Dong-Geol Choi ECCV, 2022 project page / video / code / arXiv We use simple self-supervised pretext tasks and a loss-based sampler to sample both representative and difficult data from an unlabeled pool. |

|

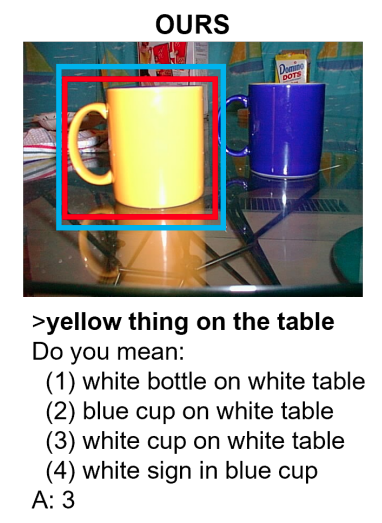

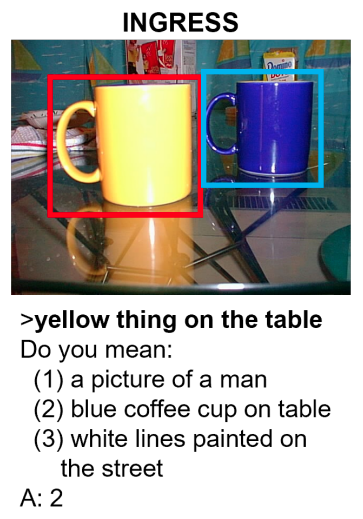

John Seon Keun Yi*, Yoonwoo Kim*, Sonia Chernova arXiv, 2021 arXiv We use semantic scene graphs to disambiguate referring expressions in an interactive object grounding scenario. This is especially useful in scenes with multiple identical objects. |

|

Jingdao Chen, John Seon Keun Yi, Mark Kahoush, Erin S. Cho, Yong Kwon Cho Sensors, 2020 paper We use 2D inpainting methods to complete occlusions and imperfections in 3D building point cloud scans. |

|

|

|

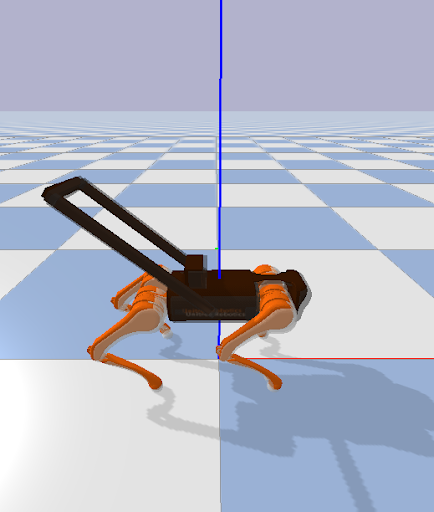

PI: Sehoon Ha, Bruce Walker An HRI model for a guide dog robot that distinguishes different force commands from the attached harness and reacts by adjusting its movement. |

|

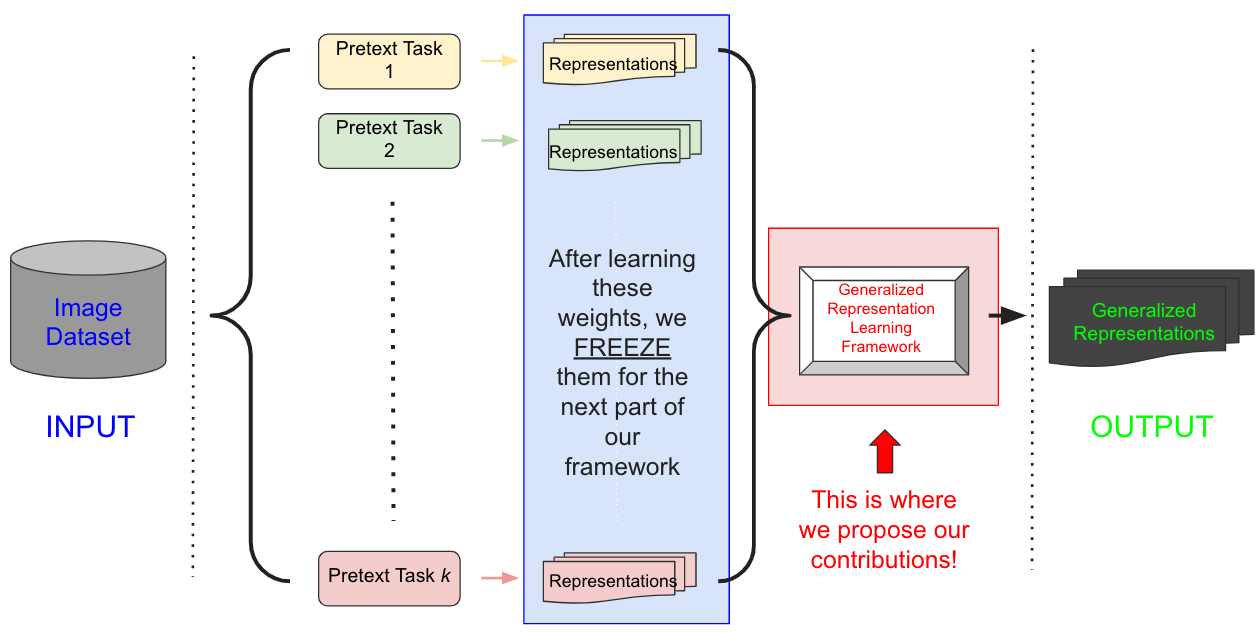

CS8803 LS: Machine Learning with Limited Supervision - Class Project video We aim to learn domain-agnostic, generalizable representations that yield good performance on multiple downstream tasks by leveraging multiple self-supervised pretext tasks. We demonstrate one of the proposed approaches, which uses an ensemble of multiple pretext tasks to make final predictions on the downstream tasks. |

|

|

|

Teaching Assistant: Introduction to Computer Science: CS111 Summer 2025, Summer 2024 Introduction to Artificial Intelligence: CS440 Spring 2025 Software Engineering: CS411 Fall 2025 |

|

Teaching Assistant: Intro to Robotics and Perception: CS3630 Fall 2022, Spring 2022, Fall 2021, Spring 2021, Fall 2020, Spring 2020 |

|

This page is adapted from this template, which was created by Jon Barron. |